New Renderer (OpenGL 3.3)

-

Thanks!

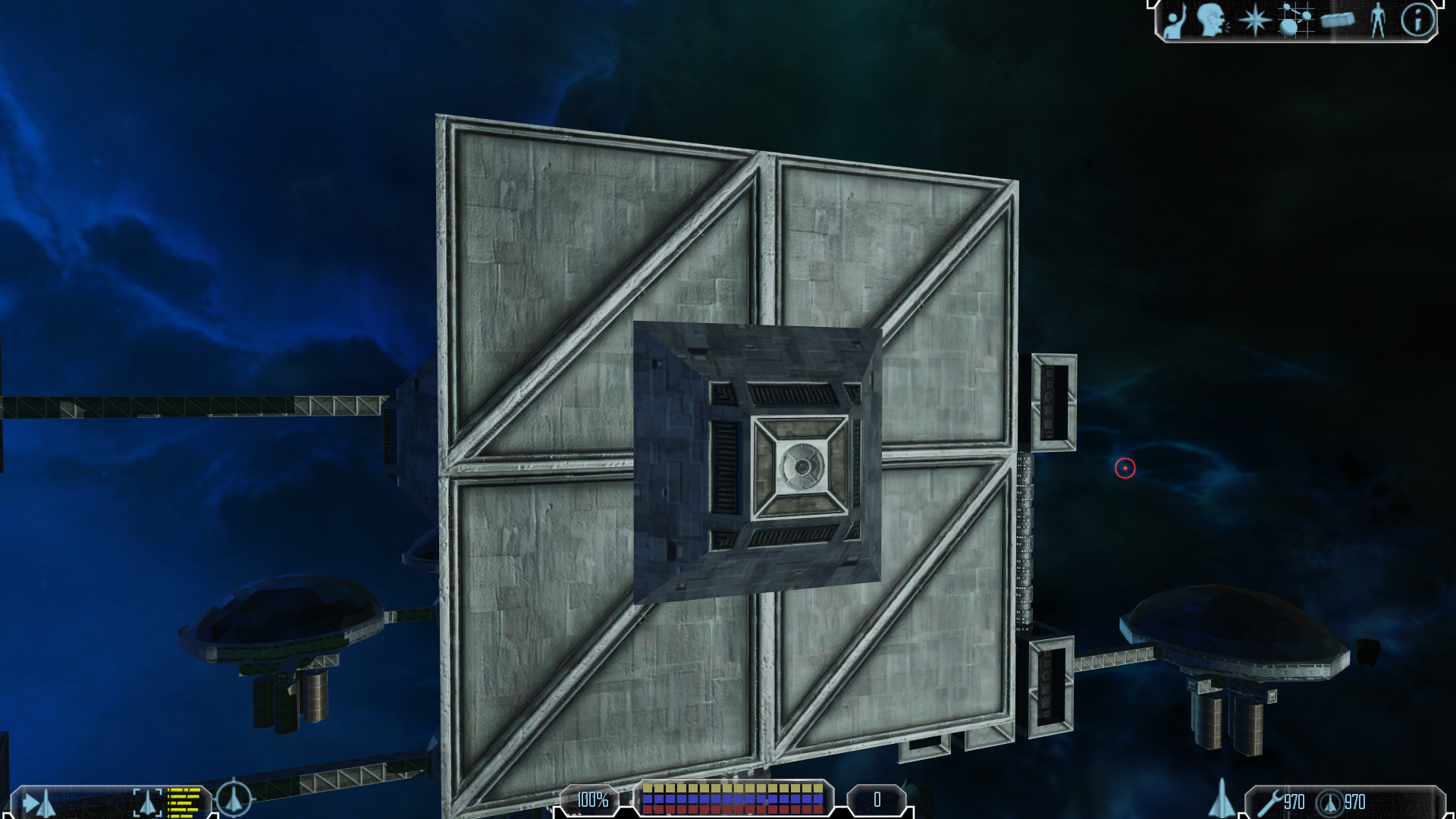

I was able to reverse the list of asteroids which are about to be rendered and integrate it into the shadow rendering. Also I now automatically generate the necessary TBN data for normal maps etc. on asteroids, because FL does not support saving them into the models.

The result can be seen in this video: http://www.flnu.net/downloads/fl1011.mp4

It currently costs a lot of FPS despite instancing, so improving performance is the next step.
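For readers unfamiliar with the TBN generation mentioned above, here is a minimal sketch of the usual per-triangle computation from positions and texture coordinates. It is not the code used in the renderer; the function name `triangle_tbn` and the helpers are made up for illustration, and a real implementation would typically average the results per vertex.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def scale(a, s):
    return tuple(x * s for x in a)

def normalize(a):
    length = math.sqrt(dot(a, a))
    return tuple(x / length for x in a)

def triangle_tbn(p0, p1, p2, uv0, uv1, uv2):
    """Return (tangent, bitangent, normal) for one triangle (hypothetical helper)."""
    e1, e2 = sub(p1, p0), sub(p2, p0)
    du1, dv1 = uv1[0] - uv0[0], uv1[1] - uv0[1]
    du2, dv2 = uv2[0] - uv0[0], uv2[1] - uv0[1]
    det = du1 * dv2 - du2 * dv1
    if abs(det) < 1e-12:
        raise ValueError("degenerate UV mapping")
    inv = 1.0 / det
    # Solve e1 = du1*T + dv1*B and e2 = du2*T + dv2*B for T and B.
    raw_t = tuple(inv * (dv2 * a - dv1 * b) for a, b in zip(e1, e2))
    raw_b = tuple(inv * (du1 * b - du2 * a) for a, b in zip(e1, e2))
    normal = normalize(cross(e1, e2))
    # Gram-Schmidt: make the tangent perpendicular to the normal.
    tangent = normalize(sub(raw_t, scale(normal, dot(normal, raw_t))))
    bitangent = cross(normal, tangent)
    # Flip the bitangent if the UV mapping is mirrored.
    if dot(bitangent, raw_b) < 0.0:
        bitangent = scale(bitangent, -1.0)
    return tangent, bitangent, normal
```

For a triangle lying in the XY plane with UVs aligned to X and Y, this yields the X axis as tangent, the Y axis as bitangent and the Z axis as normal.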

-

TBN data could be spread across the UV mapping vectors in VMeshData. FL materials don’t use more than two UV maps, so six more channels as defined by FVF are free for the taking. Some quantization techniques could be employed to compress the data into fewer bits. Though if generating them on the fly isn’t hurting performance, it might not be as useful.
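As one possible illustration of the quantization idea, the sketch below packs a unit vector into two 8-bit values with octahedral encoding. This is only one of several options and not something proposed in the thread; the names `oct_encode` and `oct_decode` are illustrative, and the input is assumed to be a non-zero, roughly unit-length vector.

```python
import math

def _sign(v):
    return 1.0 if v >= 0.0 else -1.0

def oct_encode(n, bits=8):
    """Pack a unit vector into two unsigned integers of `bits` bits each."""
    x, y, z = n
    s = abs(x) + abs(y) + abs(z)
    x, y = x / s, y / s            # project onto the octahedron |x|+|y|+|z| = 1
    if z < 0.0:                    # fold the lower hemisphere over the diagonals
        x, y = (1.0 - abs(y)) * _sign(x), (1.0 - abs(x)) * _sign(y)
    top = (1 << bits) - 1
    # Map [-1, 1] to the integer range [0, top].
    return (round((x * 0.5 + 0.5) * top), round((y * 0.5 + 0.5) * top))

def oct_decode(e, bits=8):
    """Inverse of oct_encode; returns an approximate unit vector."""
    top = (1 << bits) - 1
    x = e[0] / top * 2.0 - 1.0
    y = e[1] / top * 2.0 - 1.0
    z = 1.0 - abs(x) - abs(y)
    if z < 0.0:                    # undo the fold for the lower hemisphere
        x, y = (1.0 - abs(y)) * _sign(x), (1.0 - abs(x)) * _sign(y)
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)
```

With a normal and a tangent stored this way, the bitangent can be rebuilt in the shader from their cross product plus a single handedness sign, so the full TBN frame would fit into far fewer channels than nine floats.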

-

Thank you, I really like the look, too!

@Treewyrm: Thanks for the hints, but I know that. The problem is that FL does not support more than two texture coordinates when rendering asteroids. So the only other option would be to load the model yourself instead of letting FL do it. This is an option for the future, and since you can also only use one material at the moment, it would be an improvement. For normal models, the TBN data is already stored in additional texture coordinates.

-

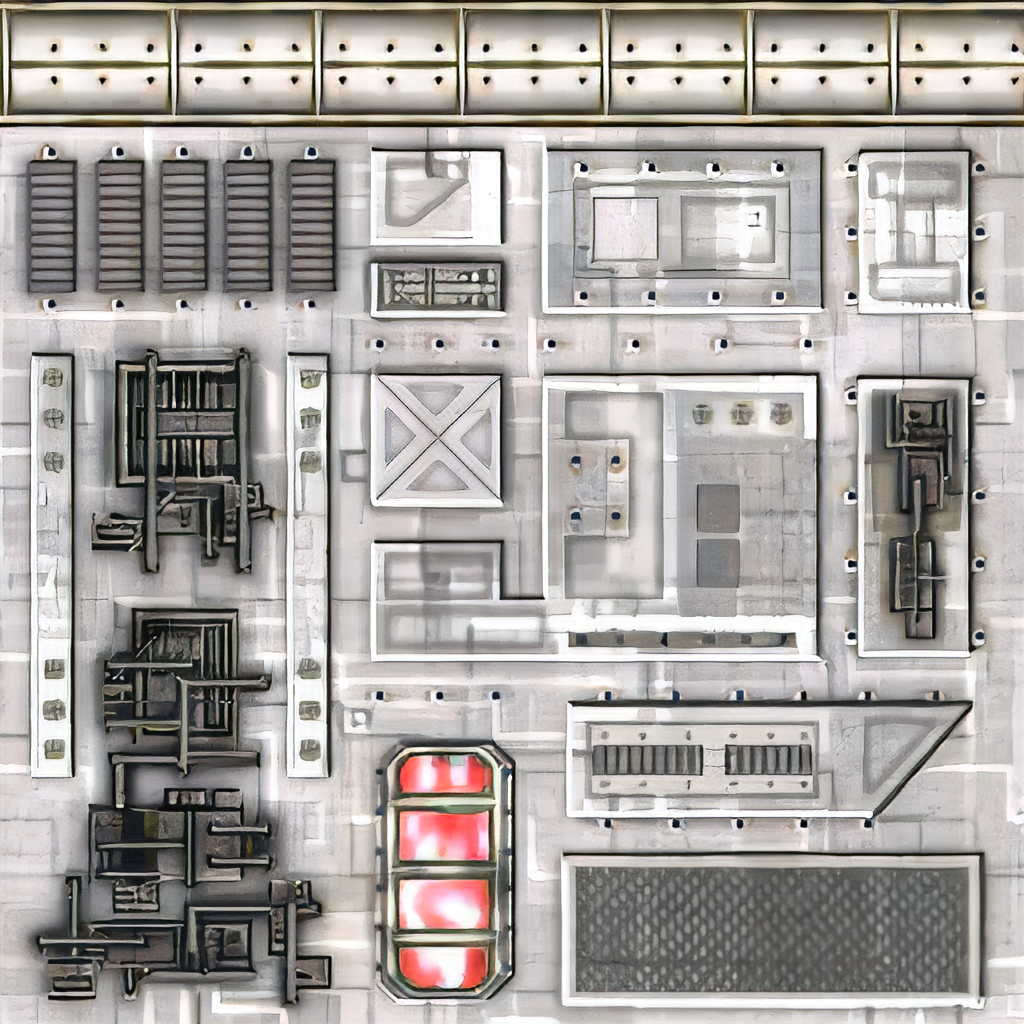

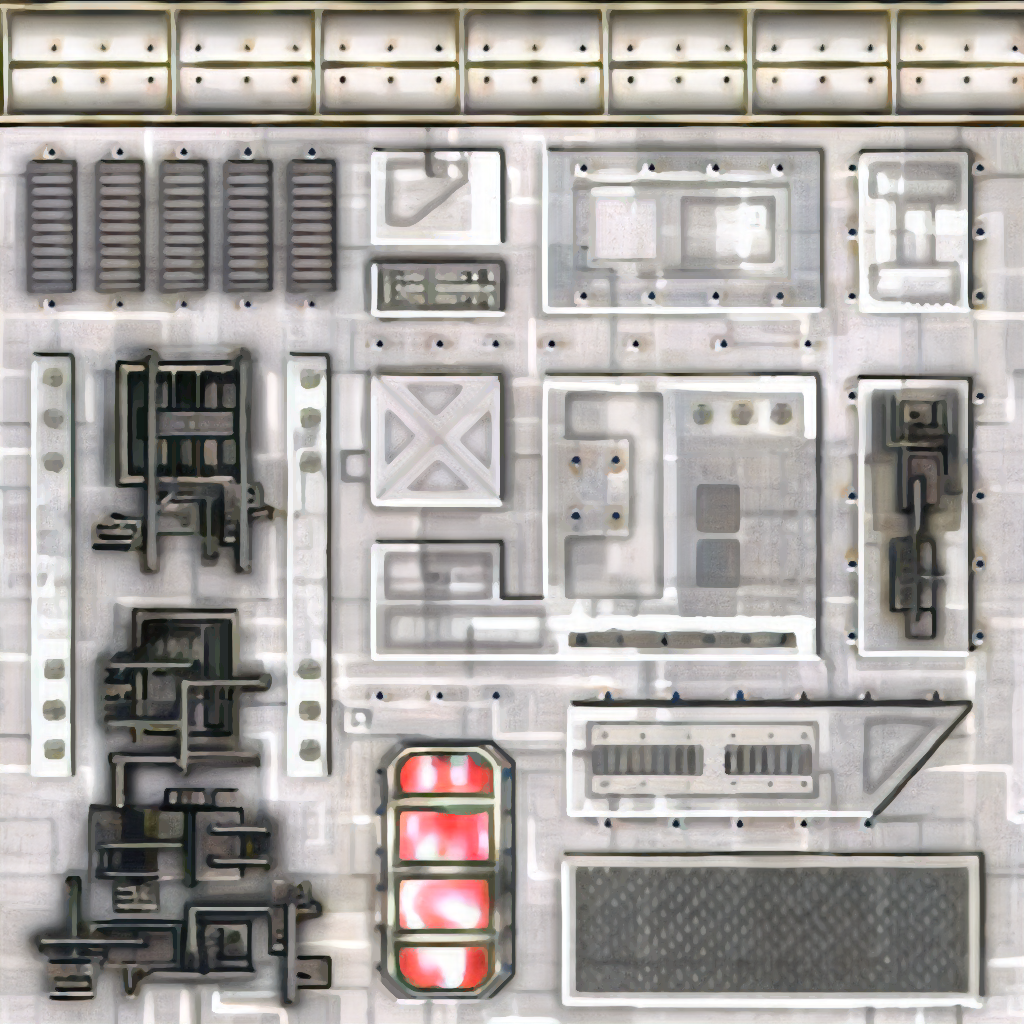

While I made some internal progress, here are the latest results of my texture upscaling attempts:

As you can see this takes the game to a whole new level. Some example videos are here:

http://www.flnu.net/downloads/FL2411.mp4

http://www.flnu.net/downloads/FL2411_2.mp4

Keep in mind that the material settings are not tweaked yet, so there is still room for improvement. Currently there is only one separate material, for glass. Everything else uses the same one, for which I quickly tested some values and chose the best-looking ones. If you have a different taste, you could make all reflections super smooth etc.

-

Is it ESRGAN, PPON, or your own model?

I tried the Misc x4 pretrained model for ESRGAN and the result was kinda good. Then I tried PPON, and for some details it’s better than Misc x4. PPON doesn’t support an alpha channel, so I need to extract the alpha channel with GIMP.

On the one hand, manual editing is better than upscaling, but on the other hand, upscaling helps a lot if you want the best possible accuracy.

Here is a sample of a Liberty AI upscale I did. I warn you that I had to split the 256x256 textures into 128x128 parts because my computer isn’t using CUDA cores and, as always, has outdated components… The window part has been done with PPON. liberty_256 is the original and 1024 is the Misc 4x one.

-

You first need to undo the dds compression. The textures above are done with ESRGAN and pretrained models (two steps, undo dds first and then upscaling). There are quite extensive lists of models you can find on the net. I will try other networks next (e.g. the one you suggested), but the current results are quite impressive. There are tools for ESRGAN which allow alpha upscaling, although the one I tested (IEU) only worked for one texture, not for mass conversion. I did not have time yet to debug that.

Here is the texture upscaled with the same method used in the videos: http://www.flnu.net/downloads/liberty2_1024.png

I did not have any problems with cuda, everything worked fine. But the chip in my laptop is not that old (maxwell). You also could use cpu only, although that sadly is quite slow.

If you get better results, let me know!

-

Schmackbolzen wrote:

You first need to undo the dds compression. The textures above are done with ESRGAN and pretrained models (two steps, undo dds first and then upscaling). There are quite extensive lists of models you can find on the net. I will try other networks next (e.g. the one you suggested), but the current results are quite impressive. There are tools for ESRGAN which allow alpha upscaling, although the one I tested (IEU) only worked for one texture, not for mass conversion. I did not have time yet to debug that.

Here is the texture upscaled with the same method used in the videos: http://www.flnu.net/downloads/liberty2_1024.png

I did not have any problems with cuda, everything worked fine. But the chip in my laptop is not that old (maxwell). You also could use cpu only, although that sadly is quite slow.

If you get better results, let me know!

I already used BC1_Smooth2, but the 1024 one lost its tag while renaming. (Always disliked these artifacts…)

I only noticed the alpha model option while restoring IEU after a failed Windows update two weeks ago. I selected the Skyrim one.

I added the PPON one to the Mediafire shared folder. I think the edge sharpness is great, but the details are weird compared to ESRGAN 4xMisc. *_PPON is the right one.

Which pretrained models did you use?

-

The one I found best and used on all the images is the ground one. I also tested the misc one and the box one, but they either looked weird or too clean. For removing the dds artefacts, I also found the one you use to be the best so far. I will try different models/networks as soon as I have time for it.

-

I have a fun fact about BC1 compression artifact removal.

When you use BC1_smooth2 and then misc, the result is not that detailed, but when you use another BC1 removal model and then misc, it’s over-detailed.

It’s rather strange.

Edit : Mixed BC1_smooth opacity 128 + BC1_take2 Background

Edit 2 : Fixed URL of “Edit”

-

I noticed that you can mix models, so I ended up using a hybrid between the fallout weapons and ground textures models (you have to stay above 0.7, otherwise you will get colour shifts).
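Mixing two pretrained models is usually done by linearly interpolating their weights. The sketch below shows that idea on plain Python dictionaries; it assumes both checkpoints share the same layer names and shapes, and `interpolate_weights` as well as the meaning of `alpha` (the share of the first model) are illustrative assumptions, not the exact tool or setting used here.

```python
def interpolate_weights(model_a, model_b, alpha):
    """Blend two weight dictionaries: alpha * A + (1 - alpha) * B.

    model_a / model_b map layer names to flat lists of floats
    (a stand-in for real checkpoint tensors).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if model_a.keys() != model_b.keys():
        raise ValueError("models must have identical layer names")
    blended = {}
    for name, weights_a in model_a.items():
        weights_b = model_b[name]
        if len(weights_a) != len(weights_b):
            raise ValueError(f"shape mismatch in layer {name!r}")
        blended[name] = [alpha * a + (1.0 - alpha) * b
                         for a, b in zip(weights_a, weights_b)]
    return blended
```

With `alpha = 0.75`, for example, the result stays dominated by the first model, which would correspond to staying above the 0.7 mark mentioned above if that value refers to the interpolation weight.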

Also I developed an algorithm to automatically create roughness maps. The result can be seen in this video:

http://www.flnu.net/downloads/Fl1501.mp4

There are still some small issues left. One of them is that, most of the time, I somehow find the image too dark. That will be one of the next things I try to fix.

-

I have an idea too. Removing all bevel and shading.

Here is the principle :

Take a 3D model creator.

Create a plane.

Put a random normal map on the plane where some shapes are put, squares, triangles, etc.

Put a camera above the plane.

Add a light source.

Animate the light source to describe a circle around the plane at different light elevations, for example 0°, then 10°, and so on.

Take an uncompressed video of each light elevation.

Afterwards, decompose the video(s) into PNG pictures.

Then, with the same camera, take a video of the same length without the normal map and decompose it into a series of PNG pictures.

Put all normal map pics in the lowres folder of the AI upscaler and put the pics without normal map in its reference images folder.

Finally, train and see the result on real images.

If it works, it would be nice, because it could be applied to the textures of every game that has prerendered texture effects.

-

(Sorry for the long delay, was busy plus got a bad cold.)

Yeah, I see what you want to do, and I was hoping there was a way. I would use it for automatic height map creation. But as far as I understand, you would need different neural network layouts for that. Btw. there is a network which creates normal maps in the wiki. I tested it, but it creates weird results (probably not good training data).

I already tried training my network with height map data, but the CNNs I used did not seem to work with it. When I have the time I will try some more stuff, probably using ESRGAN.

Regarding the wrapper:

I was able to change the rendering pipeline to be nearly like: https://learnopengl.com/PBR/Theory

I don’t use any AO maps, since the bent normal maps already create a nice effect. I also changed the lights to be sphere lights instead of point lights, since the suns are quite large. The latter is based on the UE4 engine presentation notes plus some annoying testing, since the general implementations I found seem to be incorrect and the notes are not clear on the equations.

In general I noticed that most of the stuff I use now is from UE4 (if they still use the stuff).
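To illustrate the sphere light idea, here is a small sketch of the "representative point" approach as it is commonly described for UE4-style sphere lights: instead of the light centre, the specular term uses the point on the sphere closest to the reflection ray. This is a CPU-side illustration with made-up names, not the shader used in the wrapper, and it deliberately leaves out the energy normalization term.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def representative_point(to_light_center, reflection_dir, radius):
    """Point on or inside the light sphere that is closest to the reflection ray.

    to_light_center: vector from the shaded point to the light centre
    reflection_dir:  unit-length reflection vector at the shaded point
    radius:          radius of the sphere light (0 gives a point light)
    """
    d = dot(to_light_center, reflection_dir)
    # Vector from the light centre to the closest point on the reflection ray.
    center_to_ray = tuple(d * r - l
                          for r, l in zip(reflection_dir, to_light_center))
    dist = math.sqrt(dot(center_to_ray, center_to_ray))
    # Equivalent to saturate(radius / dist), but safe when dist is zero.
    t = 1.0 if dist <= radius else radius / dist
    return tuple(l + c * t for l, c in zip(to_light_center, center_to_ray))

def light_direction(to_light_center, reflection_dir, radius):
    """Normalized direction to use in the specular term."""
    p = representative_point(to_light_center, reflection_dir, radius)
    length = math.sqrt(dot(p, p))
    return tuple(x / length for x in p)
```

When the reflection ray misses the sphere, the returned point lies on its surface; when the ray passes through the sphere, the point lies on the ray itself; with a radius of zero it collapses to the ordinary point light direction.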

Here is a video from a few days later, back in January: http://www.flnu.net/downloads/Fl1901.mp4 At the end I disable the environment maps so that one can compare. It looks really good now in my opinion.

Basically I am now hunting the last bugs, which sadly are not that easy to find. Plus I don’t have that much time currently.

-

I found this.

I tried, but I don’t know how to make it work. I think it can help to separate heights and normals (not normal maps).

I tried to remove shadows and highlights with GIMP, but the result was never good, since shadow details are missing in the original, as you can see in the Defender’s texture. I had a conversation with somebody on the GameUpscale Discord and he gave me some ImageMagick .sh delight and deshadow scripts. They worked, but there is a sort of bloom after applying them…

-

Sorry for double post but WOW ! Just WOW !

https://www.youtube.com/watch?v=548sCh0mMRc&feature=emb_title

-

Looks interesting, but I think they still have a long way to go.

Last weekend I had time to test an idea I had for a while. Took me the whole Saturday to finish it, but the results are way better than I expected. Basically I use the generated normal maps to smooth the textures, so that I lose the details. This actually is correct, since the details are in the normal map. Before I had to downscale the impact of the normal maps, because it would look very weird. Now I can have stronger normal maps and it looks really good.

Here is a video of the result: http://www.flnu.net/downloads/fl1503.mkv

The codec is h265 this time, because with h264 the file was about 250 MB. It took me a while to find good settings, but now it is only about 120 MB and looks almost the same.

Edit: Here is a second, shorter video, where you also can see the more 3D look of the textures:

http://www.flnu.net/downloads/fl1503_2.mp4

-

Nice! I had the same idea, but I used the CrazyBump normal map generator and rendered the nrms in Paint.net, and it was not good. Is there a way to get the detail removal without using normal maps? It’s just to see how effective it is.

BTW I think modelling gribles would be good. I already started on the Bretonia capships. For a station: import the original model, place the gribles, then remove the station model and export the gribles. Add a hardpoint called HP_gribles to the station CMP at position 0;0;0. Make an equipment entry for the gribles and finally add a line to the station loadout:

equip = "Station"_gribles, Hp_gribles

It can also be done for ships.

Do you think it’s a good idea ?

-

I don’t know what you mean by “gribles”. Can you elaborate?

Here is an example of the smoothing:

Before:

After:

As you can see, it only removes the smaller edges. That’s why I wasn’t expecting it to work as well as it does. Also, I wrote it more as a proof of concept. There is still some room for improvement.

For the larger ones I do have an idea for an algorithm, but this is for later when I have the time for it.

-